In a 6/13/18 article, David Atkins critiques the assumptions behind the Law et al. article titled “Land use strategies to mitigate climate change in carbon dense temperate forests”. He shows how hypothetical science can be, and has been, used without any caveat to give some groups slogans that meet their messaging needs, instead of waiting for the hypothesis to be validated and thereby considering the holistic needs of the world.

I) BACKGROUND

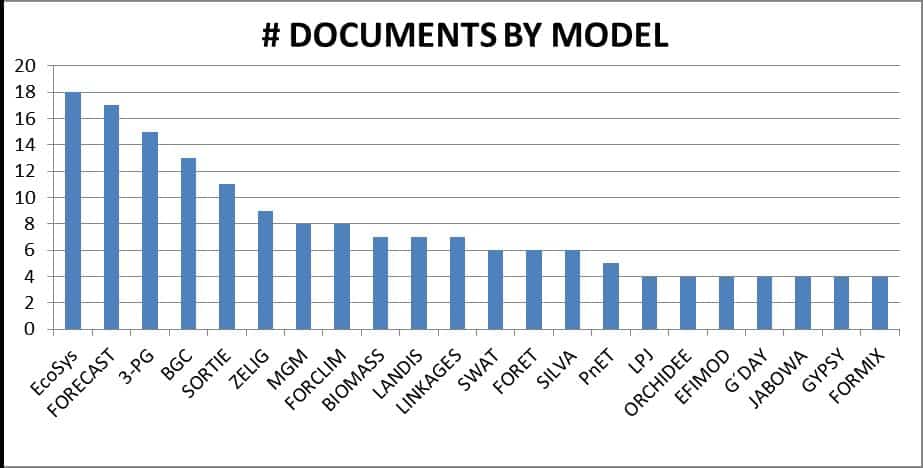

The noble goal of Law et al. is to determine the “effectiveness of forest strategies to mitigate climate change”. They state that their methodology “should integrate observations and mechanistic ecosystem process models with future climate, CO2, disturbances from fire, and management.”

A) The generally UNCONTESTED points in the Law et al. article regarding locking up more carbon (ignoring any debate over the size of the percentage increase) are as follows:

1) Reforestation on appropriate sites – ‘Potential 5% improvement in carbon storage by 2100’

2) Afforestation on appropriate sites – ‘Potential 1.4% improvement in carbon storage by 2100’

B) The CONTESTED points in the Law et al. article regarding locking up 17% more carbon by 2100 are as follows:

1) Lengthened harvest cycles on private lands

2) Restricting harvest on public lands

C) Atkins, at the 2018 International Mass Timber Conference (which Oregon Wild protested), notes that: “Oregon Wild (OW) is advocating that storing more carbon in forests is better than using wood in buildings as a strategy to mitigate climate change.” OW’s first reference from Law et al. states: “Increasing forest carbon on public lands reduced emissions compared with storage in wood products” (see the Law et al. abstract). Another reference quoted by OW from Law et al. goes so far as to claim that: “Recent analysis suggests substitution benefits of using wood versus more fossil fuel-intensive materials have been overestimated by at least an order of magnitude.”

II) Law et al. CAVEATS ignored by OW

A) They clearly acknowledge that their conclusions are based on computer simulations (modeling various scenarios using a specific set of assumptions subject to debate by other scientists).

B) In some instances, they use words like “probably”, “likely” and “appears” when describing some assumptions and outcomes rather than blindly declaring certainty.

III) Atkins’ CRITIQUE

Knowing that the modeling used in the Law et al. study involves significant assumptions about each of the extremely complex components and their interactions, Atkins investigates the assumptions used to integrate those models with the limited variables mentioned. He shows how they overestimate the carbon cost of using wood, underestimate the carbon cost of storing carbon on the stump, and underestimate the carbon cost of substituting non-renewable resources for wood. This allows Oregon Wild to tout the unproven statements quoted in item “I-C” above as fact and as justification for policy changes, instead of treating them as an interesting but unproven hypothesis that must be validated to complete the scientific process.

Quotes from Atkins’ Critique:

A) Wood Life Cycle Analysis (LCA) Versus Non-renewable substitutes.

1) “The calculation used to justify doubling forest rotations assumes no leakage. Leakage is a carbon accounting term referring to the potential that if you delay cutting trees in one area, others might be cut somewhere else to replace the gap in wood production, reducing the supposed carbon benefit.”

2) “It assumes a 50-year half-life for buildings instead of the minimum 75 years the ASTM standard calls for, which reduces the researchers’ estimate of the carbon stored in buildings.”

3) “It assumes a decline of substitution benefits, which other LCA scientists consider as permanent.”

4) “analysis chooses to account for a form of fossil fuel leakage, but chooses not to model any wood harvest leakage.”

5) “A report published by the Athena Institute in 2004, looked at actual building demolition over a three-plus-year period in St. Paul, Minn. It indicated 51 percent of the buildings were older than 75 years. Only 2 percent were demolished in the first 25 years and only 12 percent in the first 50 years.”

6) “The Law paper assumes that the life of buildings will get shorter in the future rather than longer. In reality, architects and engineers are advocating the principle of designing and building for longer time spans – with eventual deconstruction and reuse of materials rather than disposal. Mass timber buildings substantially enhance this capacity. There are Chinese Pagoda temples made from wood that are 800 to 1,300 years old. Norwegian churches are over 800 years old. I visited a cathedral in Scotland with a roof truss system from the 1400s. Buildings made of wood can last for many centuries. If we follow the principle of designing and building for the long run, the carbon can be stored for hundreds of years.”

7) “The OSU scientists assumed wood energy production is for electricity production only. However, the most common energy systems in the wood products manufacturing sector are combined heat and power (CHP) or straight heat energy production (drying lumber or heat for processing energy) where the efficiency is often two to three times as great and thus provides much larger fossil fuel offsets than the modeling allows.”

8) “The peer reviewers did not include an LCA expert.”

9) The Dean of the OSU College of Forestry was asked how he reconciles the differences between two faculty members with doctorates, one of whom is the LCA specialist (and also the director of CORRIM, a non-profit that conducts and manages research on the environmental impacts of production, use, and disposal of forest products). The Dean’s answer was: “It isn’t the role of the dean to resolve these differences, … Researchers often explore extremes of a subject on purpose, to help define the edges of our understanding … It is important to look at the whole array of research results around a subject rather than using those of a single study or publication as a conclusion to a field of study.”

10) Alan Organschi, a practicing architect and a professor at Yale, stated his thought process as: “There is a huge net carbon benefit [from using wood] and enormous variability in the specific calculations of substitution benefits … a ton of wood (which is half carbon) goes a lot farther than a ton of concrete, which releases significant amounts of carbon during a building’s construction.” He then paraphrased a NASA climate scientist from the late 1980s who said: ‘Quit using high fossil fuel materials and start using materials that sink carbon, that should be the principle for our decisions.’

11) The European Union, in 2017, based on “current literature”, called “for changes to almost double the mitigation effects by EU forests through Climate Smart Forestry (CSF). … It is derived from a more holistic and effective approach than one based solely on the goals of storing carbon in forest ecosystems”

12) Various CORRIM members stated:

a) “Law et al. does not meet the minimum elements of a Life Cycle Assessment: system boundary, inventory analysis, impact assessment and interpretation. All four are required by the international standards (ISO 14040 and 14044); therefore, Law et al. does not qualify as an LCA.”

b) “What little is shared in the article regarding inputs to the simulation model ignores the latest developments in wood life cycle assessment and sustainable building design, rendering the results at best inaccurate and most likely incorrect.”

c) “The PNAS paper, which asserts that growing our PNW forests indefinitely would reduce the global carbon footprint, ignores that at best there would be 100 percent leakage to other areas with lower productivity … which will result in 2 to 3.5 times more acres harvested for the same amount of building materials. Alternatively, all those buildings will be built from materials with a higher carbon footprint, so the substitution impact of using fossil-intensive products in place of renewable low carbon would result in >100 percent leakage.”

d) More on leakage: “In 2001, seven years after implementation, Jack Ward Thomas, one of the architects of the plan and former chief of the U.S. Forest Service, said: ‘The drop in the cut in the Pacific Northwest was essentially replaced by imports from Canada, Scandinavia and Chile … but we haven’t reduced our per-capita consumption of wood. We have only shifted the source.’”

e) “Bruce Lippke, professor emeritus at the University of Washington and former executive director of CORRIM said, ‘The substitution benefits of wood in place of steel or concrete are immediate, permanent and cumulative.’”
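To make two of the assumptions quoted in this section concrete, here is a minimal Python sketch. It is not the Law et al. model or Atkins’ calculation. It assumes simple exponential decay for the building half-life (item 2) and a simple proportional discount for leakage (item 12c). The function names and the gross benefit of 10 units are hypothetical, chosen only for illustration.

```python
def fraction_remaining(years: float, half_life: float) -> float:
    """Share of building-stored carbon still in place after `years`,
    ASSUMING simple exponential decay with the given half-life."""
    return 0.5 ** (years / half_life)


def net_benefit(gross_benefit: float, leakage_rate: float) -> float:
    """Carbon benefit left after leakage, ASSUMING leakage is a simple
    fraction of the gross benefit (1.0 means 100 percent leakage)."""
    return gross_benefit * (1.0 - leakage_rate)


if __name__ == "__main__":
    # Compare the 50-year half-life with the 75-year figure from item 2.
    for half_life in (50, 75):
        for years in (25, 50, 75, 100):
            share = fraction_remaining(years, half_life)
            print(f"half-life {half_life} yr, after {years} yr: {share:.0%} remains")

    # Hypothetical gross benefit of 10 units at several leakage rates.
    for rate in (0.0, 0.5, 1.0, 1.2):
        print(f"leakage {rate:.0%}: net benefit {net_benefit(10.0, rate):+.1f}")
```

Under these assumptions, a 50-year half-life leaves half of the stored carbon after 50 years, while a 75-year half-life leaves roughly 63 percent. At 100 percent leakage the net benefit is zero, and anything above 100 percent makes it negative.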

B) Risks Resulting from High Densities of Standing Timber

1) “The paper underestimates the amount of wildfire in the past and chose not to model increases in the amount of fire in the future driven by climate change.”

2) “The authors chose to treat the largest fire in their 25-year calibration period, the Biscuit Fire (2003), as an anomaly. Yet 2017 provided a similar number of acres burned. … the model also significantly underestimated five of the six other larger fire years”

3) “The paper also assumed no increase in fires in the future”

4) Atkins’ comments and quotes support what some of us here on the NCFP blog have been saying for years about storing more timber on the stump. It is certain that storing more carbon on the stump on federal lands will increase stand densities, and that this will bring a highly significant increase in carbon loss to fire, insects and disease. Well documented, validated and fundamental plant physiology and fire science can only lead us to that conclusion. Increases in drought caused by global warming will only add stress to already stressed, overly dense forests. That further decreases their viability and health by reducing access to already limited resources such as minerals, moisture and sunlight, while the closer proximity between trees makes it easier and faster for fire, insects and disease to spread between adjacent trees.

Footnote:

In their conclusion, Law et al. state that “GHG reduction must happen quickly to avoid surpassing a 2°C increase in temperature since preindustrial times.” This emphasis leads them to focus on strategies which, IMHO, will only exacerbate the long-term problem.

→ For perspective, consider the “Failed Prognostications of Climate Alarm”