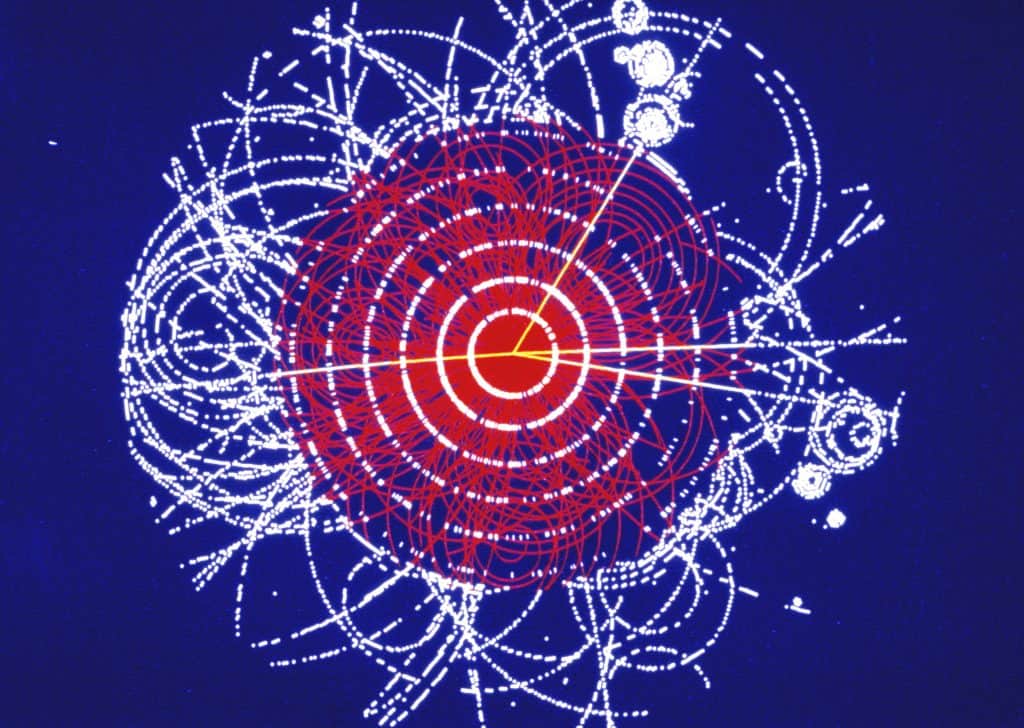

Science & Society Picture Library / Getty

When we talk about what “science tells us,” it’s sometimes good to reflect on the abstraction “science” and on what makes any human activity “science.”

In this piece, Dan Sarewitz looks at several perspectives, ranging from theoretical physics to improving antifreeze formulations.

What, then, joins Hossenfelder’s field of theoretical physics to ecology, epidemiology, cultural anthropology, cognitive psychology, biochemistry, macroeconomics, computer science, and geology? Why do they all get to be called science? Certainly it is not similarity of method. The methods used to search for the subatomic components of the universe have nothing at all in common with the field geology methods in which I was trained in graduate school. Nor is something as apparently obvious as a commitment to empiricism a part of every scientific field. Many areas of theory development, in disciplines as disparate as physics and economics, have little contact with actual facts, while other fields now considered outside of science, such as history and textual analysis, are inherently empirical. Philosophers have pretty much given up on resolving what they call the “demarcation problem,” the search for definitive criteria to separate science from nonscience; maybe the best that can be hoped for is what John Dupré, invoking Wittgenstein, has called a “family resemblance” among fields we consider scientific. But scientists themselves haven’t given up on assuming that there is a single thing called “science” that the rest of the world should recognize as such.

The demarcation problem matters because the separation of science from nonscience is also a separation of those who are granted legitimacy to make claims about what is true in the world from the rest of us Philistines, pundits, provocateurs, and just plain folks. In a time when expertise and science are supposedly under attack, some convincing way to make this distinction would seem to be of value. Yet Hossenfelder’s jaunt through the world of theoretical physics explicitly raises the question of whether the activities of thousands of physicists should actually count as “science.” And if not, then what in tarnation are they doing?

When Hossenfelder writes about “science” or the “scientific method” she seems to have in mind a reasoning process wherein theories are formulated to extend or modify our understanding of the world and those theories in turn generate hypotheses that can be subjected to experimental or observational confirmation—what philosophers call “hypothetico-deductive” reasoning. This view is sensible, but it is also a mighty weak standard to live up to. Pretty much any decision is a bet on logical inferences about the consequences of an intended action (a hypothesis) based on beliefs about how the world works (theories). We develop guiding theories (prayer is good for you; rotate your tires) and test their consequences through our daily behavior—but we don’t call that science. We can tighten up Hossenfelder’s apparent definition a bit by stipulating that hypothesis-testing needs to be systematic, observations carefully calibrated, and experiments adequately controlled. But this has the opposite problem: It excludes a lot of activity that everyone agrees is science, such as Darwin’s development of the theory of natural selection, and economic modeling based on idealized assumptions like perfect information flow and utility-maximizing human decisions.

Of course the standard explanation of the difficulties with theoretical physics would simply be that science advances by failing, that it is self-correcting over time, and that all this flailing about is just what has to happen when you’re trying to understand something hard. Some version of this sort of failing-forward story is what Hossenfelder hears from many of her colleagues. But if all this activity is just self-correction in action, then why not call alchemy, astrology, phrenology, eugenics, and scientific socialism science as well, because in their time, each was pursued with sincere conviction by scientists who believed they were advancing reliable knowledge about the world? On what basis should we say that the findings of science at any given time really do bear a useful correspondence to reality? When is it okay to trust what scientists say? Should I believe in susy or not? The popularity of general-audience books about fundamental physics and cosmology has long baffled me. When, say, Brian Greene, in his 1999 bestseller The Elegant Universe, writes of susy that “Since supersymmetry ensures that bosons and fermions occur in pairs, substantial cancellations occur from the outset—cancellations that significantly calm some of the frenzied quantum effects,” should I believe that? Given that (despite my Ph.D. in a different field of science) I don’t have a prayer of understanding the math behind susy, what does it even mean to “believe” such a statement? How would it be any different from “believing” Genesis or Jabberwocky? Hossenfelder doesn’t seem so far from this perspective. “I don’t see a big difference between believing nature is beautiful and believing God is kind.”

Given the amount of flailing I do on a daily basis, I must be a scientist! 😉

Science isn’t perfect, but it sure beats the alternative: unfounded opinion, which appears in this forum a lot. Maybe we need a post about the intellectual traits that are valuable for critical thinking.

https://web.archive.org/web/20040803030458/http://www.criticalthinking.org:80/University/intraits.html

Our land management agencies are required to use the best available science and to use it appropriately. NEPA requires federal agencies to rely upon “high quality” information and “accurate scientific analysis.” 40 C.F.R. § 1500.1(b). The scientific information upon which an agency relies must be of “high quality because accurate scientific analysis, expert agency comments, and public scrutiny are essential to implementing NEPA.” Idaho Sporting Congress v. Thomas, 137 F.3d 1146, 1151 (9th Cir. 1998) (internal quotations omitted); see also Portland Audubon Society v. Espy, 998 F.2d 699, 703 (9th Cir. 1993) (overturning decision which “rests on stale scientific evidence, incomplete discussion of environmental effects . . . and false assumptions”).

During ESA Section 7 consultation, the agency “shall use the best scientific and commercial data available.” 16 U.S.C. § 1536(a)(2). “[T]he Federal agency requesting formal consultation shall provide the Service with the best scientific and commercial data available or which can be obtained during the consultation” to serve as the basis for the Fish and Wildlife Service’s subsequent biological opinion (BO). 50 C.F.R. § 402.14(d).

Given how much we still have to learn about ecosystems and the effects of human management, all land management should be conducted within a framework of intentional learning, with constant monitoring and feedback between management actions and their consequences. Consider the adaptive management framework and methods described in V. Sit and B. Taylor, eds., Statistical methods for adaptive management studies. B.C. Ministry of Forests Research Branch, Victoria, B.C. http://www.for.gov.bc.ca/hfd/pubs/docs/lmh/lmh42.htm Among other things, the agency should disclose how reliable the scientific studies and other evidence used to support the NEPA analysis and the decision are.

Marcot, B. G. 1998. Selecting appropriate statistical procedures and asking the right questions: a synthesis. Pp. 129–142 in V. Sit and B. Taylor, eds., Statistical methods for adaptive management studies. B.C. Ministry of Forests Research Branch, Victoria, B.C. http://www.for.gov.bc.ca/hfd/pubs/docs/lmh/lmh42.htm

A lot of people appear to suffer from a cognitive bias known as “belief perseverance”:

Anderson, C. A. (2007). Belief perseverance. In R. F. Baumeister & K. D. Vohs (Eds.), Encyclopedia of Social Psychology (pp. 109–110). Thousand Oaks, CA: Sage. http://www.psychology.iastate.edu/faculty/caa/abstracts/2005-2009/07a.pdf

Then again, in forestry, scientific opinion is what matters when dealing with site-specific conditions. You can check at the door the offensive comments that pretend some of us reject science. Are you looking to upgrade from a ‘broad brush’?

Second, thanks for posting the traits for critical thinking. Oddly, I just graduated from a school (a liberal arts university) that was supposed to teach critical thinking but did not reflect those values in practice.

As to adaptive management, various efforts have been tried, including the Adaptive Management Areas in the NW Forest Plan. Does anyone know of a paper showing how that worked?

The following quote is interesting:

“[E]xpert judgement cannot replace statistically sound experiments. … Anecdotes and expert judgement alone are not recommended for evaluating management actions because of their low reliability and unknown bias.”

What is a statistically sound experiment? Back in the old days (at OSU), we had designed experiments in which we varied treatments and watched what happened. Think seedlings and nursery treatments. Experimental forests were designed for long-term studies. But it seems to me that many of the studies we look at today are not designed experiments. They take existing data and make a series of assumptions, because the nature of the question often rules out a designed experiment. They are modeling exercises, which are fine, but they are not the same as a statistically sound designed experiment.

It seems to me that Gluckman’s idea of extended peer review with practitioners would make scientific information more meaningful. I see both/and, not either/or, in terms of involving scientists of varying disciplines and an array of practitioners.

Just because no long-term study on the effects of thinning in the Sierra Nevada has been completed doesn’t mean we shouldn’t thin. There will be no “latest science” contradicting current styles of active forest management in the Sierra Nevada. Measuring the benefits of the current style of thinning over five decades would be nice, but I doubt that is even possible within the government.