One of the challenges facing policy folks and politicians today is “how seriously should we take the outputs of models?” Countries spend enormous amounts of research funds attempting to predict the changes climate change will bring. How far down the predictive ladder can we go, from GCMs to “the distribution of this plant species in 2050” or “how much carbon will be accumulated by these trees in 2100,” before we say “probably not worth calculating; let’s spend the bucks on CCS research or other methods of actually fixing the climate problem”?

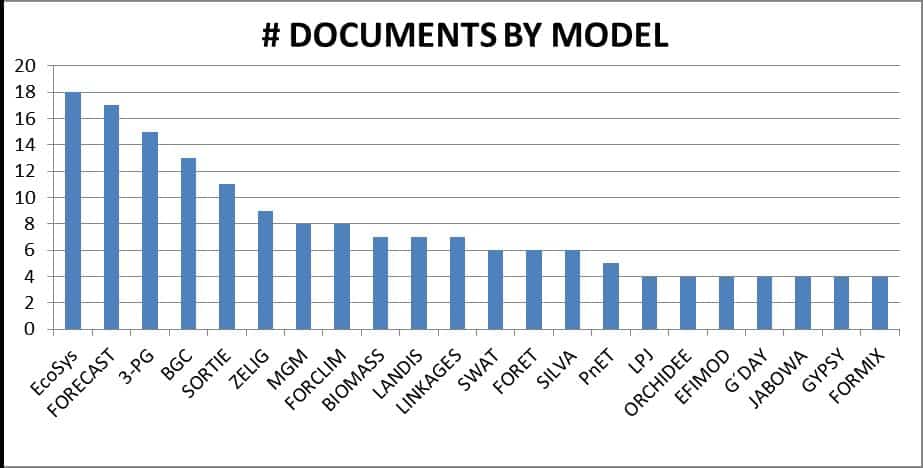

There have been several recent papers and op-eds looking at modeling with regard to economics and climate. Because the models used by the IPCC include economic projections to predict future climate, there is an obvious linkage. Before we get to those, though, I’d like to share some personal history of modeling efforts back in the relatively dark ages (the 1970s, some 50 years ago). Modeling came about because of the availability of computer programs; I actually took a graduate class in FORTRAN programming at Yale for credit towards my degree. My first experience with models was with Dr. Dave Smith, our silviculture professor at Yale, talking about JABOWA (this simulation model is still available on Dan Botkin’s website). Back in the ’70s, the model was pretty primitive, and Dr. Smith was skeptical. He told us students something like, “That model would grow trees to 200 feet, but since they know trees only grow to 120 feet, they just put a card in that says ‘if height is greater than 120, height equals 120.’” The idea at the time was that models would help scientists explore which parameters are important, and then test the hypotheses derived from the models against real-world data. I also remember Dr. Franklin commenting on Dr. Cannell’s physiological models (Cannell is Australian): “It’s modeling to you, but it sure sounds like muddling to me.” Back in those days, the emphasis was on collecting data, with modeling as an approach to help understand real-world data.

It is interesting, and perhaps understandable, that as models improved, the concept of “what models are good for” would change to “if we could predict the future using models, we would make better decisions.” Hence the use of models in decision-making.

I’m not saying that they’re all unhelpful (say, fire models), but there is a danger in taking them too far in decision-making: not considering the disciplinary and cross-disciplinary controversies around each one, not linking them to real-world tests of their accuracy, and especially not having open discussions of accuracy and utility with stakeholders, decision-makers and experts. Utility, as we shall see in the papers in the next posts, is in the eye of the beholder. Even the least scientifically “cool” model (say, compared to GCMs), such as an herbicide drift model or a growth and yield model, usually has a feedback mechanism by which you can contact a human being somewhere whose job it is to make tweaks to the model to improve it. The more complex the model, though, the less likely there is a guru (such as a Forplan guru; remember them?) who understands all the moving parts and can make appropriate changes. So the more complex the model, the more acceptance rests on “belief in its community of builders and their linkages” rather than on “outputs we can test.” And for predictive models, empirical tests are conceptually pretty difficult, because predicting 2010 well doesn’t mean you will predict 2020 or 2100 well.

And at some level of complexity, it’s all about trust and not so much about data at all. But who decides where the model fits between “better than nothing, I guess” and “oracle”? And on what basis?

Please feel free to share your own experiences, successes and not so much, with models and their use in decision-making.

You’ve been busy since I last popped in, Sharon. Love the new page format!

As to your post, I think you might find the following link intriguing. There, Steve Keen takes Nordhaus apart limb from limb. To your point, I would add that, just as in programming, you get out the garbage you put in. Nordhaus’s model is garbage because that’s what he put in it. Ask first: WHO is the modeler? What biases do they bring to the model? What qualifications? And what expectations? If these all pass the sniff test, one probably has a model that can be relied on to, at least, inform one of future potentialities.

Here’s the link, or rather, the link to naked capitalism (which I love and visit every day for the news) that links to the link: https://www.nakedcapitalism.com/2019/07/the-cost-of-climate-change.html

Enjoy! I sure did.

Thanks Eric, good to see you here!

My problem with both Nordhaus’s work and his critics is that I think trying to predict the unpredictable (what climate change will cost by degree, over the long term), not to speak of arguing about how to predict the unpredictable, is a waste of time. It doesn’t really matter whether people are biased or not, because (1) we don’t know how things will change, and (2) we don’t know how much to attribute to climate change.

We’re supposed to believe that if we knew how much it costs, we could make wiser decisions. But we can’t know. Let’s just take the cost of wildfires, say in California. How much is due to past fire suppression practices? How much is due to building with burnable materials around (oh well, that is hard to avoid in California)? How much is due to the climate, once those influences are separated out? If we blame cheatgrass for changing fire regimes, is cheatgrass “due to climate change,” separate from it, or some kind of interaction? How could we possibly quantify it?
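To make the interaction point concrete, here is a minimal sketch with made-up numbers. It assumes, purely hypothetically, that fire losses are the product of a fuel factor and a climate factor (a toy, not a real fire-cost model). Because the two drivers interact, the answer to “how much is due to climate?” depends on which driver you count first.

```python
# Hypothetical toy model: annual wildfire losses (arbitrary units) scale
# multiplicatively with two drivers, so the drivers interact.
BASELINE_LOSS = 100.0

def losses(fuel_factor: float, climate_factor: float) -> float:
    return BASELINE_LOSS * fuel_factor * climate_factor

# Made-up factors: accumulated fuels double losses; a warmer climate adds 50%.
FUEL, CLIMATE = 2.0, 1.5
total_increase = losses(FUEL, CLIMATE) - losses(1.0, 1.0)  # 300 - 100 = 200

# Attribution A: "turn on" climate first, then fuel.
climate_share_first = losses(1.0, CLIMATE) - losses(1.0, 1.0)   # 150 - 100 = 50
# Attribution B: "turn on" fuel first, then climate.
climate_share_last = losses(FUEL, CLIMATE) - losses(FUEL, 1.0)  # 300 - 200 = 100

print(f"total increase: {total_increase}")
print(f"climate's share, counted first: {climate_share_first}")
print(f"climate's share, counted last:  {climate_share_last}")
```

Both 50 and 100 are defensible answers for the same toy system; splitting the interaction between drivers takes a convention (for example, averaging over orderings), and that convention is a choice rather than a measurement.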

Somehow we are supposed to believe that people can round up unknowns into estimates (for the world! Are you kidding me?) that are worthwhile. I, personally, am not buying it.

It’s statistics, Sharon. It’s a priori assumed we’ll never know for certain. Thus, the last sentence in my original comment “a model that can be relied on to, at least, inform one of future potentialities.”

They can be relied on to inform one of some but not all future potentialities. That’s why people have different views of how much to rely on them, and for what.

I am not familiar with Nordhaus’s work specifically, but I do work with wildfire modeling. While estimating the cost of climate change is incredibly complicated, and there will undoubtedly be unknown effects that won’t be accounted for, I don’t think that is any reason not to try. If we don’t try to estimate the cost, then we assume the cost is zero.

We know that there is not zero cost.

Full disclosure – I was once a “Forplan guru,” so I’m familiar with and wary of “marvelous toy” thinking. The basic lesson I took from that was that every modeler should understand and disclose the limitations of their model to those who would use its predictions. In particular, there should be sensitivity analysis of assumptions (which should lead to research to replace important assumptions with facts). But I’m not sure I get the point here. Any decision-maker has to make assumptions about how things work and how the future will change, and at least a model explicitly specifies them and allows them to be analyzed. The only (interrelated) questions seem to me to be how much to invest in modeling, and how to use the results.
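A one-at-a-time sensitivity analysis is about the simplest version of the assumption-testing described above. The sketch below assumes a made-up compound-growth projection of stand carbon to 2100; the model, the parameter names, and their values are all hypothetical. It nudges each assumption by ±10% while holding the others at their base values, then ranks the assumptions by how much the projection swings.

```python
# Hypothetical projection: carbon stock in a stand by 2100 (arbitrary units),
# assuming simple compound growth net of mortality. All numbers are made up.
BASE = {"initial_stock": 100.0, "growth_rate": 0.03, "mortality_rate": 0.01}
YEARS = 2100 - 2020

def projected_stock(initial_stock: float, growth_rate: float, mortality_rate: float) -> float:
    return initial_stock * (1.0 + growth_rate - mortality_rate) ** YEARS

def one_at_a_time(params: dict, change: float = 0.10) -> dict:
    """Perturb each assumption by +/- change (relative), holding the others
    at their base values, and record the resulting swing in the output."""
    swings = {}
    for name, value in params.items():
        low = projected_stock(**{**params, name: value * (1 - change)})
        high = projected_stock(**{**params, name: value * (1 + change)})
        swings[name] = abs(high - low)
    return swings

base_output = projected_stock(**BASE)
swings = one_at_a_time(BASE)
# Largest swing first: these are the assumptions most worth replacing with data.
for name, swing in sorted(swings.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:15s} swing = {swing:8.1f}  ({swing / base_output:.0%} of base)")
```

In this toy, the growth-rate assumption dominates, which would flag it as the first candidate for field research. One-at-a-time analysis does miss interactions between assumptions; variance-based methods capture those, at the price of many more model runs.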