Patrick Brown of The Breakthrough Institute posted this today at The Free Press. It’s about wildfires and climate, and also IMHO does a great job of explaining the climate science system and what gets published. Warning: this is an extraordinarily long post because I believe it’s paywalled.

This may be surprising to some of you, but to others, not so much. Here are some excerpts. I bolded sentences that deal with the theme of “why are the usual sciences that deal with, say, forests or wildfire, often overlooked in climate papers (as is adaptation)?”

I am a climate scientist. And while climate change is an important factor affecting wildfires over many parts of the world, it isn’t close to the only factor that deserves our sole focus.

So why does the press focus so intently on climate change as the root cause? Perhaps for the same reasons I just did in an academic paper about wildfires in Nature, one of the world’s most prestigious journals: it fits a simple storyline that rewards the person telling it.

The paper I just published—“Climate warming increases extreme daily wildfire growth risk in California”—focuses exclusively on how climate change has affected extreme wildfire behavior. I knew not to try to quantify key aspects other than climate change in my research because it would dilute the story that prestigious journals like Nature and its rival, Science, want to tell.

This matters because it is critically important for scientists to be published in high-profile journals; in many ways, they are the gatekeepers for career success in academia. And the editors of these journals have made it abundantly clear, both by what they publish and what they reject, that they want climate papers that support certain preapproved narratives—even when those narratives come at the expense of broader knowledge for society.

To put it bluntly, climate science has become less about understanding the complexities of the world and more about serving as a kind of Cassandra, urgently warning the public about the dangers of climate change. However understandable this instinct may be, it distorts a great deal of climate science research, misinforms the public, and most importantly, makes practical solutions more difficult to achieve.

……………

Here’s how it works.

The first thing the astute climate researcher knows is that his or her work should support the mainstream narrative—namely, that the effects of climate change are both pervasive and catastrophic and that the primary way to deal with them is not by employing practical adaptation measures like stronger, more resilient infrastructure, better zoning and building codes, more air conditioning—or in the case of wildfires, better forest management or undergrounding power lines—but through policies like the Inflation Reduction Act, aimed at reducing greenhouse gas emissions.

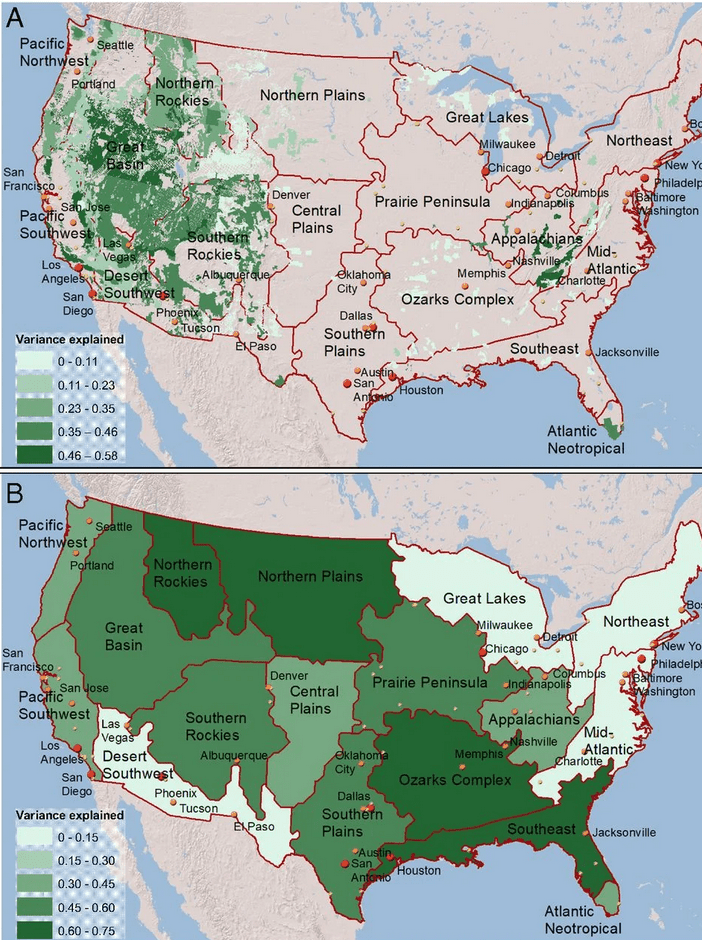

So in my recent Nature paper, which I authored with seven others, I focused narrowly on the influence of climate change on extreme wildfire behavior. Make no mistake: that influence is very real. But there are also other factors that can be just as or more important, such as poor forest management and the increasing number of people who start wildfires either accidentally or purposely. (A startling fact: over 80 percent of wildfires in the US are ignited by humans.)

In my paper, we didn’t bother to study the influence of these other obviously relevant factors. Did I know that including them would make for a more realistic and useful analysis? I did. But I also knew that it would detract from the clean narrative centered on the negative impact of climate change and thus decrease the odds that the paper would pass muster with Nature’s editors and reviewers.

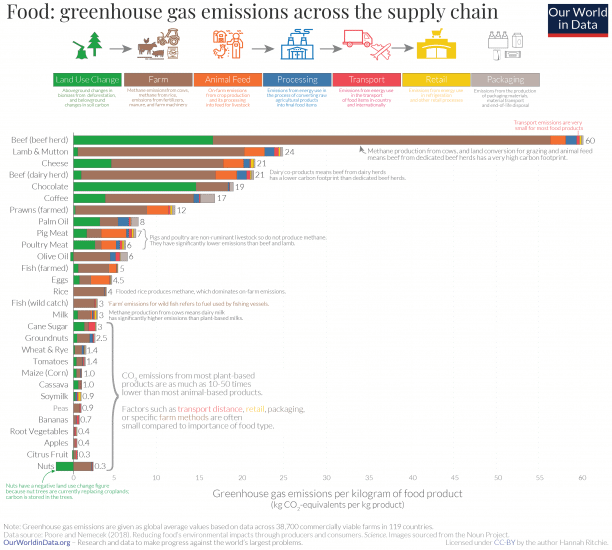

This type of framing, with the influence of climate change unrealistically considered in isolation, is the norm for high-profile research papers. For example, in another recent influential Nature paper, scientists calculated that the two largest climate change impacts on society are deaths related to extreme heat and damage to agriculture. However, the authors never mention that climate change is not the dominant driver for either one of these impacts: heat-related deaths have been declining, and crop yields have been increasing for decades despite climate change. To acknowledge this would imply that the world has succeeded in some areas despite climate change—which, the thinking goes, would undermine the motivation for emissions reductions.

This leads to a second unspoken rule in writing a successful climate paper. The authors should ignore—or at least downplay—practical actions that can counter the impact of climate change. If deaths due to extreme heat are decreasing and crop yields are increasing, then it stands to reason that we can overcome some major negative effects of climate change. Shouldn’t we then study how we have been able to achieve success so that we can facilitate more of it? Of course we should. But studying solutions rather than focusing on problems is simply not going to rouse the public—or the press. Besides, many mainstream climate scientists tend to view the whole prospect of, say, using technology to adapt to climate change as wrongheaded; addressing emissions is the right approach. So the savvy researcher knows to stay away from practical solutions.

Here’s a third trick: be sure to focus on metrics that will generate the most eye-popping numbers. Our paper, for instance, could have focused on a simple, intuitive metric like the number of additional acres that burned or the increase in intensity of wildfires because of climate change. Instead, we followed the common practice of looking at the change in risk of an extreme event—in our case, the increased risk of wildfires burning more than 10,000 acres in a single day.

This is a far less intuitive metric that is more difficult to translate into actionable information. So why is this more complicated and less useful kind of metric so common? Because it generally produces larger factors of increase than other calculations. To wit: you get bigger numbers that justify the importance of your work, its rightful place in Nature or Science, and widespread media coverage. *
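To see why a risk-of-exceedance metric tends to yield bigger factors than a change in the average, here is a minimal sketch. It is not the method used in the Nature paper. It assumes, purely for illustration, a normally distributed quantity with made-up numbers; the function name `exceedance_ratio` and all constants are hypothetical.

```python
from statistics import NormalDist


def exceedance_ratio(baseline_mean, shifted_mean, sd, threshold):
    """Ratio of P(X > threshold) after vs. before a shift in the mean."""
    before = 1.0 - NormalDist(baseline_mean, sd).cdf(threshold)
    after = 1.0 - NormalDist(shifted_mean, sd).cdf(threshold)
    return after / before


# Hypothetical numbers, chosen only for illustration (not from any paper).
BASELINE_MEAN = 10.0
SHIFTED_MEAN = 10.6        # a 6 percent increase in the average
SD = 2.0
EXTREME_THRESHOLD = 16.0   # three standard deviations above the baseline mean

mean_ratio = SHIFTED_MEAN / BASELINE_MEAN
risk_ratio = exceedance_ratio(BASELINE_MEAN, SHIFTED_MEAN, SD, EXTREME_THRESHOLD)

print(f"change in the average: x{mean_ratio:.2f}")
print(f"change in risk of exceeding the threshold: x{risk_ratio:.2f}")
```

Under these assumptions, the same small shift shows up as a modest factor in the average and a much larger factor in the probability of crossing a far-tail threshold. Real wildfire data are not normally distributed, so the sizes here are only indicative of the general effect.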

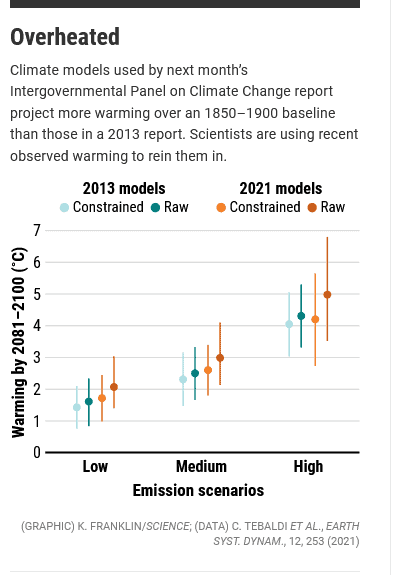

Another way to get the kind of big numbers that will justify the importance of your research—and impress editors, reviewers, and the media—is to always assess the magnitude of climate change over centuries, even if that timescale is irrelevant to the impact you are studying.

For example, it is standard practice to assess impacts on society using the amount of climate change since the industrial revolution, but to ignore technological and societal changes over that time. This makes little sense from a practical standpoint since societal changes in population distribution, infrastructure, behavior, disaster preparedness, etc., have had far more influence on our sensitivity to weather extremes than climate change has since the 1800s. This can be seen, for example, in the precipitous decline in deaths from weather and climate disasters over the last century. Similarly, it is standard practice to calculate impacts for scary hypothetical future warming scenarios that strain credibility while ignoring potential changes in technology and resilience that would lessen the impact. Those scenarios always make for good headlines.

A much more useful analysis would focus on changes in climate from the recent past that living people have actually experienced and then forecasting the foreseeable future—the next several decades—while accounting for changes in technology and resilience.

In the case of my recent Nature paper, this would mean considering the impact of climate change in conjunction with anticipated reforms to forest management practices over the next several decades. In fact, our current research indicates that these changes in forest management practices could completely negate the detrimental impacts of climate change on wildfires.

* When I first saw Patrick’s paper on TwitX, I thought “why on earth did they pick that bizarre variable when we know total acres haven’t gone up?” I even wondered, skeptic that I am, if they had looked at a lot of other variables that didn’t work out for the Preferred Narrative.

**************

I think the article might be paywalled. So here’s more. I think I’ve tried to say this in the past, but much less articulately.

**************

This more practical kind of analysis is discouraged, however, because looking at changes in impacts over shorter time periods and including other relevant factors reduces the calculated magnitude of the impact of climate change, and thus it weakens the case for greenhouse gas emissions reductions.

You might be wondering at this point if I’m disowning my own paper. I’m not. On the contrary, I think it advances our understanding of climate change’s role in day-to-day wildfire behavior. It’s just that the process of customizing the research for an eminent journal caused it to be less useful than it could have been.

This means conducting the version of the research on wildfires that I believe adds much more practical value for real-world decisions: studying the impacts of climate change over relevant time frames and in the context of other important changes, like the number of fires started by people and the effects of forest management. The research may not generate the same clean story and desired headlines, but it will be more useful in devising climate change strategies.

But climate scientists shouldn’t have to exile themselves from academia to publish the most useful versions of their research. We need a culture change across academia and elite media that allows for a much broader conversation on societal resilience to climate.

The media, for instance, should stop accepting these papers at face value and do some digging on what’s been left out. The editors of the prominent journals need to expand beyond a narrow focus that pushes the reduction of greenhouse gas emissions. And the researchers themselves need to start standing up to editors, or find other places to publish.

What really should matter isn’t citations for the journals, clicks for the media, or career status for the academics—but research that actually helps society.

Why would we do that? (Just joking, I am a proud graduate of land-grant institutions with that explicit mission).

*******************************

Here’s the beginning of the piece:

If you’ve been reading any news about wildfires this summer—from Canada to Europe to Maui—you will surely get the impression that they are mostly the result of climate change.

Here’s the AP: Climate change keeps making wildfires and smoke worse. Scientists call it the “new abnormal.”

And PBS NewsHour: Wildfires driven by climate change are on the rise—Spain must do more to prepare, experts say.

And The New York Times: How Climate Change Turned Lush Hawaii Into a Tinderbox.

And Bloomberg: Maui Fires Show Climate Change’s Ugly Reach.

I am a climate scientist. And while climate change is an important factor affecting wildfires over many parts of the world, it isn’t close to the only factor that deserves our sole focus.

So why does the press focus so intently on climate change as the root cause? Perhaps for the same reasons I just did in an academic paper about wildfires in Nature, one of the world’s most prestigious journals: it fits a simple storyline that rewards the person telling it.

The paper I just published—“Climate warming increases extreme daily wildfire growth risk in California”—focuses exclusively on how climate change has affected extreme wildfire behavior. I knew not to try to quantify key aspects other than climate change in my research because it would dilute the story that prestigious journals like Nature and its rival, Science, want to tell.

This matters because it is critically important for scientists to be published in high-profile journals; in many ways, they are the gatekeepers for career success in academia. And the editors of these journals have made it abundantly clear, both by what they publish and what they reject, that they want climate papers that support certain preapproved narratives—even when those narratives come at the expense of broader knowledge for society.

To put it bluntly, climate science has become less about understanding the complexities of the world and more about serving as a kind of Cassandra, urgently warning the public about the dangers of climate change. However understandable this instinct may be, it distorts a great deal of climate science research, misinforms the public, and most importantly, makes practical solutions more difficult to achieve.

Why is this happening?

It starts with the fact that a researcher’s career depends on his or her work being cited widely and perceived as important. This triggers the self-reinforcing feedback loops of name recognition, funding, quality applications from aspiring PhD students and postdocs, and of course, accolades.

But as the number of researchers has skyrocketed in recent years—there are close to six times more PhDs earned in the U.S. each year than there were in the early 1960s—it has become more difficult than ever to stand out from the crowd. So while there has always been a tremendous premium placed on publishing in journals like Nature and Science, it’s also become extraordinarily more competitive.

In theory, scientific research should prize curiosity, dispassionate objectivity, and a commitment to uncovering the truth. Surely those are the qualities that editors of scientific journals should value.

In reality, though, the biases of the editors (and the reviewers they call upon to evaluate submissions) exert a major influence on the collective output of entire fields. They select what gets published from a large pool of entries, and in doing so, they also shape how research is conducted more broadly. Savvy researchers tailor their studies to maximize the likelihood that their work is accepted. I know this because I am one of them.

Disclaimer: I’ve had convos with various folks at The Breakthrough Institute and will be moderating a panel at an upcoming conference of theirs, but have never spoken to Patrick.