The last panel at the Science Forum talked about the latest thinking in how to do planning, bringing together the information from the first four panels.

Clare Ryan from the University of Washington spoke about policy design and implementation, especially the use of best science. (slides pdf) When thinking about policy, we think about the policy goals contained in the policy itself, the tools available to implement the policy, and the organizations and resources involved. Ryan described a three-legged stool for implementing a program or policy. The first leg is a sound theory, one that is relevant and relates to management actions. This leg seems pretty firm, but the theory needs to focus on process principles and on what more can be done to implement them. The next leg is managerial and political skill. Leadership needs to be committed to the statutory goals. Ryan says to pay attention to this leg of implementation: leadership should invest in training, mentoring, and career development to build capacity, and should provide resources and incentives to recognize and reward success. The last leg is support. Ryan thinks the Forest Service has struggled with this leg. A policy should be supported by constituent groups, legislatures and the courts. To build support, focus on communication, engage people early in the process, and make materials easily available and understandable. Use partnerships, collaboration, and other tools. Decision making that is consistent with NEPA, the APA and other policies will also build support. Ryan said that the three-legged stool has both statutory and non-statutory elements (managerial skill and support).

Ryan talked about the consideration of best science. In policy design, she encouraged approaches that define the characteristics of best science, encourage joint fact finding (collaborative research), practice adaptive governance, and make process principles, including the decisionmaking processes, a high priority. Ryan described the critical areas policy in the State of Washington, which requires counties and cities to include the best available science in policies for critical areas. The counties and cities receive assistance in defining best science, covering peer review, methods, inference, analysis, context, references, and sources, as well as criteria for obtaining best science, incorporating it, and identifying inadequate science.

Ryan advocated joint fact finding and collaborative research as a potential alternative to adversarial science. People can work together to define issues, gather data, conduct analysis, and apply the information to reach decisions. This approach is more useful in some situations than in others.

Ryan also mentioned the practice of adaptive governance (see related post on this blog), in which science doesn’t drive the policy. Policies often lack a single target. Policies are also resilient (for instance, the 1872 mining law is still around). The policies drive decision making. The process explores what goals we are managing for, and it involves local nongovernmental organizations and communities in determining those goals collaboratively. Science helps monitor progress toward the goals, while managers account for uncertainty and new information.

Process principles must be a high priority, because many challenges to agency decisions are about process. The process must be supported internally through training, resources and rewards. Ryan urges the development of patterns and communities of practice. She mentioned that the NEPA for the 21st Century initiative has good information on best practices.

Ryan suggested several ways to address uncertainty and the need for flexibility. Where it’s difficult to hold people to agreements, consider contingent (if-then) agreements. She said that planning processes should be viewed not as an end, but as the beginning of continued long-term interactions. She also mentioned the need for new institutions, including partnerships and coordinating councils.

Martin Nie from the University of Montana, one of the administrators of this blog, spoke from his prepared remarks. He said that the right questions are being asked in the NOI: questions about institutions, process, scale, use of science, and accountability. He said that we must appreciate the limitations of planning. We need a broader analysis of the system’s statutory and regulatory framework, and we need to revisit the accumulation of laws. A National Forest Law Review Commission should be convened in the near future. The planning rule needs to implement the spirit and letter of our laws, which should be viewed as goals, not constraints. Then we should turn to the question of how to implement them more effectively.

Nie said that the planning rule needs to be informed from the bottom up. We need to look at how planning is being done at the local level. There is little value in plans that make no decisions. Legally binding and enforceable standards should be included. These standards help adaptive management by defining the purpose and boundaries of the process; without standards, adaptive management invites dodging. We need legal sideboards for collaboration. There is a tendency to try to maximize agency discretion, as the 2005 rule did, using the Supreme Court’s Ohio Forestry and SUWA decisions as justification. It appears that the courts will grant discretion, but the agency should think carefully. Place-based groups want more certainty and less agency discretion. Plans have been viewed as contingent wish lists, and the agency has overpromised and underdelivered. This mismatch creates distrust. Plans should be realistic.

Plans should be collaborative, but we must define the purpose of the collaboration. In the past there have been mixed signals and incompatible expectations. We need to formalize collaboration, using mechanisms such as advisory boards and advisory committees. We need to encourage the development of collaboratively written alternatives in NEPA processes. Nie said that the Forest Service needs a national advisory committee for outside advice: not another Committee of Scientists, but one that can deal with a more inclusive set of problems beyond science. There should also be technical science committees on subjects like new approaches to viability.

Forest plans should not be bloated. The previous rational planning framework treated wicked problems as scientific/technical problems, but more collaborative planning approaches make sense. We need to describe how to practice collaboration while ensuring accountability and transparency. The 2005 planning rule had an ill-defined adaptive management framework while dropping NEPA requirements and species viability, which got an important dialogue off on the wrong foot. A fully funded and working monitoring program should be implemented. Monitoring is subject to political influence and to bias over what will be monitored. Nie advocates prenegotiated commitments about what will happen if monitoring shows x or y. These predetermined courses of action should be built into the adaptive framework from the beginning, which might alleviate concerns about the amount of agency discretion. NEPA presents challenges to adaptive management, since it requires a forward-looking approach. Once the general course is set, adaptive management is a means to an end. It needs a purpose, and hopefully NEPA is a way to define that purpose.
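Nie’s prenegotiated commitments can be pictured as a set of if-then rules written down before any monitoring data come in. The sketch below is a minimal, hypothetical Python illustration, assuming each commitment pairs one monitored indicator with an agreed breach condition and an agreed response. The `Trigger` class, the `triggered_actions` function, and the example indicators and actions are illustrative inventions, not part of any Forest Service system or of Nie’s remarks.

```python
from dataclasses import dataclass
from typing import Callable, Mapping


@dataclass(frozen=True)
class Trigger:
    """One hypothetical prenegotiated commitment: if the condition holds, take the action."""
    indicator: str                     # name of the monitored indicator (hypothetical)
    breached: Callable[[float], bool]  # condition agreed on before monitoring starts
    action: str                        # predetermined response the parties agreed to


def triggered_actions(triggers: list[Trigger], results: Mapping[str, float]) -> list[str]:
    """Return the agreed actions whose conditions are met by the monitoring results.

    Indicators that were not measured are skipped rather than treated as breached.
    """
    return [
        t.action
        for t in triggers
        if t.indicator in results and t.breached(results[t.indicator])
    ]


if __name__ == "__main__":
    # Hypothetical commitments, written down before any monitoring data exist.
    commitments = [
        Trigger("stream temperature (C)", lambda v: v > 18.0, "defer grazing in riparian pastures"),
        Trigger("snags per acre", lambda v: v < 2.0, "retain additional snags in next treatment"),
    ]
    print(triggered_actions(commitments, {"stream temperature (C)": 19.5, "snags per acre": 3.0}))
```

In this sketch, the limit on discretion comes from fixing the conditions and responses as data up front rather than deciding case by case after the results arrive; it says nothing about how real commitments would be negotiated or enforced.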

Nie said that we should pause before adding more analytical requirements beyond NEPA to the planning rule, to address things like climate change or the quantification of ecosystem benefits. Some of the NOI issues are better dealt with at higher or lower levels. There should be serious thought about how plans and assessments are tiered and integrated, and how we avoid the planning shell game.

He talked about increasing interest in place-based initiatives and formalized MOUs. There is considerable attention on the Tester and Wyden bills, and there are informative initiatives across the nation, all searching for durable bottom-up solutions. For the planning rule, we need to learn lessons from these initiatives. Nie said we need to find out what role forest plans played in them, and we need to visit these places so we can be as informed as possible by bottom-up approaches. There are common themes worth considering: certainty, landscape-scale restoration, and formalized decisionmaking. These initiatives appear to be pushing the Forest Service in directions it wants to go. Where these groups are identifying problems, we can learn lessons about conflict resolution and problem solving from the grass roots.

Tony Cheng from Colorado State University began with three premises. (slides pdf)

First, National Forest planning consists of value judgments (related to choices) about linked social-ecological systems, made in the face of risk, uncertainty, and constrained budgets and time. The planning rule is really about governance, the process of making choices. Cheng referred to the phrase “coming to judgment” from the title of Daniel Yankelovich’s book. NFMA specifies some of the types of choices and judgments to be made.

Second, National Forest planning assumes that there is sufficient institutional infrastructure to support it. The original motivation of RPA was that budgets would follow planning.

Third, National Forest plan development and implementation is a shared burden. The Forest Service has insufficient capacity to address wicked problems, and forest planning has been described as a wicked problem as far back as 1983. Cheng said that formal partnerships are needed.

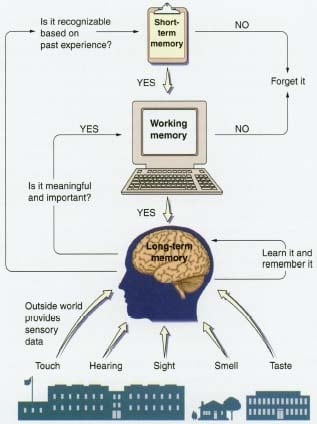

Cheng said that NFMA has the philosophical underpinning of rational comprehensive planning. One assumption is that good information will lead to good decisions, so more good science will lead to better decisions. It’s not inherently wrong to make those assumptions, but subsequent work in behavioral decisionmaking and institutional analysis has found that good decisions are a function of a well-structured process as well as of the institutions that sustain those processes.

Planning is making decisions under risk and uncertainty. Risk is the probability of an occurrence times the magnitude of the consequences of the event. One of the challenges is that we don’t know whose consequences are at stake, since there are many consequences. The planning rule can’t stop at risk assessment; we also have to look at risk decisionmaking, which is a function of risk preference, the values attached to the consequences, and cognitive and institutional biases. In collaborative processes, participants come in with a whole portfolio of risk preferences, not just about ecological or resource conditions, but sometimes about identity, reputations, and professional careers. The decision about which science to use will already bias the process.
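Cheng’s definition of risk (probability times magnitude) is simple arithmetic, and his point that there are many consequences, borne by different parties, can be shown by keeping the products separate per party instead of collapsing them into one number. A minimal Python sketch, assuming each consequence carries a hypothetical `party` label and a magnitude on a common scale. It covers only the risk-assessment half; the risk preferences and biases that Cheng said shape risk decisionmaking are not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Consequence:
    party: str          # who bears this consequence (hypothetical label)
    probability: float  # probability that the event occurs, between 0 and 1
    magnitude: float    # size of the consequence on an assumed common scale


def risk(c: Consequence) -> float:
    """Risk as defined on the panel: probability times magnitude."""
    if not 0.0 <= c.probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    return c.probability * c.magnitude


def risk_by_party(consequences: list[Consequence]) -> dict[str, float]:
    """Sum risk separately for each party rather than into a single total."""
    totals: dict[str, float] = {}
    for c in consequences:
        totals[c.party] = totals.get(c.party, 0.0) + risk(c)
    return totals
```

For example, `risk_by_party([Consequence("ranchers", 0.25, 40.0), Consequence("recreationists", 0.5, 8.0)])` gives 10.0 for ranchers and 4.0 for recreationists. Different parties can carry very different risks, and a single summed figure would hide that.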

Cheng said we know how to run a good decision process; we just need to do it. It includes defining the decision space, making a fair, inclusive process, and focusing on systems thinking. We shouldn’t think about a single desired condition but about a range of desired states. We should encourage deliberation and social learning. Accountability is a fuzzy term like resilience, with many meanings in political science, so it must be defined. The Forest Service has its own resources, like the National Collaboration Cadre, which uses a peer learning approach. Cheng also referenced the work of the U.S. Institute for Environmental Conflict Resolution. He added that the features of a good decision process include standards to avoid being arbitrary and capricious, analysis sufficient for a reasoned choice, and ways to deal with a lack of scientific information.

Cheng cited work on the Uncompahgre Plateau in Colorado involving multiple agencies and public groups. It included systems views and assessments, including an RMLANDS model. The communities in the Public Lands Partnership also developed their own assessments. They worked on goals collaboratively, and issues were scaled down to specifics. The Colorado Forest Restoration Institute organized collaborative joint fact finding. This was similar to the work that Tom Sisk described in Science Forum Panel 1, but at a smaller scale. They looked at fire regimes, and data were collected by stakeholders. Social, economic, and ecological monitoring was formalized in an NOI. The NEPA decision after the NOI was not appealed or litigated.

Cheng described the features of supportive institutions, emphasizing that good process is not enough. He quoted Elinor Ostrom, who says that institutions matter (see related post on this blog). Budgets, performance measures, and consequences need to be tied to plan outcomes; what is measured is what will get done. Other features of supportive institutions include formal and informal networks, statewide strategies, nested enterprises, boundary-spanning and bridging organizations like the Southwest Ecological Restoration Institute, and open-access information systems like database sharing. Lastly, there should be formalized structures and mechanisms for learning loops: single-loop learning asks whether the intervention worked, while double-loop learning examines the validity of the assumptions about how the system works.

In follow-up questions, Cheng also talked about statewide assessments and strategies. He explained the Forest Service State and Private Forestry redesign after the Farm Bill, which looked at restructuring how federal funds are distributed to states for forest stewardship needs. It requires state strategies and assessments. For states with large interface acreage like Colorado, facing issues like insects, wildfires, and inherent transboundary problems, there is no mechanism for integrating statewide assessments into forest plans. There is also a pool of funds for cross-boundary objectives. The Forest Landscape Restoration Act has a requirement for a non-federal match. Several things are moving toward an all-lands, cross-boundary approach. The road has been laid out, but it seems we’re trying to catch up.

Cheng had a parting question for the planning rule team: is the institutional infrastructure for this rulemaking adequate, and is the planning rule the place for addressing the issues in the NOI?

Chris Liggett, the planning director in the Southern Region, spoke about some of the planning tools his Region has been using. (slides pdf) He began by briefly mentioning the iterative alternative development process used for the Southern Appalachian plans: this “rolling alternative” NEPA approach is now endorsed by CEQ. He also talked about consistent standards across all forest plans.

Liggett listed several requirements for planning tools: openness, collaboration, practicality (ease of use), stakeholder support, efficiency, and durability (despite changes in governing regulations). He said that planning tools have shed the “black box” image of previous planning models, and that plans need to be intentional and describe a trajectory to achieve desired outcomes. He said that tools are available to move us toward openness, transparency and consistency while maintaining scientific rigor.

He talked about the Ecological Sustainability Evaluation Tool (ESE) developed by the Southern Region, based on The Nature Conservancy’s Conservation Action Planning (CAP) workbook. It supports conservation targets and links ecosystem and species targets. Liggett said that it’s easy to develop ecosystem and species targets. Within the ESE process, you select conservation targets, identify key attributes, identify indicators (including species), set indicator rating criteria, assess current conditions, and develop conservation strategies (converted in ESE to forest planning language). These steps are done collaboratively. The Ozarks/Ouachita used this process with assistance from NatureServe and the Arkansas Game and Fish Commission, and the ESE now forms the backbone of their planning process. The ESE planning steps reside in a Microsoft Access database, with tabs for each step in the process.
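The ESE steps listed above (targets, key attributes, indicators, rating criteria, current condition, strategies) map naturally onto a small nested data model. The Python sketch below is hypothetical and is not the ESE’s actual Access schema. It assumes a four-level Poor/Fair/Good/Very Good rating scale of the kind used in CAP workbooks, numeric indicators where higher values are better, indicator names that are unique within a target, and invented threshold values. Indicators rated below a chosen floor are flagged as candidates for a conservation strategy.

```python
from dataclasses import dataclass, field

# Assumed CAP-style rating scale, ordered from worst to best.
RATINGS = ["Poor", "Fair", "Good", "Very Good"]


@dataclass
class Indicator:
    name: str
    # Minimum values for Fair, Good and Very Good, in ascending order;
    # anything below the first threshold rates Poor. Assumes higher is better.
    thresholds: tuple[float, float, float]
    current: float  # assessed current condition

    def rating(self) -> str:
        # Count how many thresholds the current value meets or exceeds.
        level = sum(self.current >= t for t in self.thresholds)
        return RATINGS[level]


@dataclass
class KeyAttribute:
    name: str
    indicators: list[Indicator] = field(default_factory=list)


@dataclass
class ConservationTarget:
    name: str  # an ecosystem or species target
    attributes: list[KeyAttribute] = field(default_factory=list)

    def assess(self) -> dict[str, str]:
        """Rate every indicator under every key attribute (names assumed unique)."""
        return {
            ind.name: ind.rating()
            for attr in self.attributes
            for ind in attr.indicators
        }

    def needs_strategy(self, minimum: str = "Good") -> list[str]:
        """List indicators rated below `minimum`, i.e. candidates for a strategy."""
        floor = RATINGS.index(minimum)
        return [name for name, r in self.assess().items() if RATINGS.index(r) < floor]
```

Keeping the rating thresholds as explicit data is one way to make the collaborative step of setting rating criteria visible to participants; the source does not say whether the ESE stores them this way.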

Liggett also talked about TACCIMO, mentioned by Steve McNulty in Science Forum Panel 2. It allows managers to review current climate change forecasts and threats, match them with management options, and determine how they may affect forest management and planning. It is a web-based relational database that produces reports and maps and has a feedback loop. Statements from forest plans are clipped into the database.

He also mentioned the Forest Service Human Dimensions Toolkit, used to display economic and social science assessment information. It’s a web-based product that produces reports and secondary social and economic information from the Bureau of Economic Analysis (BEA). It’s a first cut at an expert system.

The last panelist was Mike Harper, a member of the National Association of County Planners, recently retired from Washoe County in Reno, Nevada. (slides pdf) Harper said that citizens are often more familiar with local planning processes than with the federal process, so it’s good to think about using similar approaches. As a professional planner, Harper offered some general observations. First, planning is not a science; rather, it is process oriented, an integration of vision, goals, policy and process. Second, plan making is not regulation; the primary focus is vision, goals and policy. A vision is aspirational; it is the touchstone of a plan. Goals define the parameters of the vision. Third, Harper said that “end state” plans are rarely viable. Planning involves politics, adaptive management is limited, and plans are out of date on the day of adoption. Finally, Harper said that non-implementable plans are not worth the effort, because no one will stay engaged.

Regarding ways to “foster collaborative efforts,” Harper suggested identifying and mapping where the affected audience lives and what their issues are. He also suggested identifying state and local planners who know their audience and can provide information and perspective. He encourages interactive (web-based) programs to collect, organize, and respond to comments. He says it’s important to establish an initial timetable and keep to it, focusing on key issues.

Regarding information sources, Harper said to: engage state and local organizations; encourage web links; encourage unified organizational responses; directly engage the public on issues not normally managed at state or local levels; and directly engage state and local governments on issues. He said KISS – keep the modeling simple – since complex models can obfuscate the issues. Also, if you’re going to use planning language, define it for your audience.

Regarding the question of accounting for the relationship between National Forest System lands and neighboring lands, Harper observed that enabling legislation in all states requires conservation, economic, recreation, and public facilities and services elements in master plans. He suggested partnering with local and regional organizations to help sponsor the process. The Feds are not always seen as being close to locals. Local organizations can sponsor planning activities and provide information both in and out of forest boundaries.

Harper also commented on the idea of creating a shared vision. The planning rule should consider a national vision for forests and grasslands, and the local units should tier off the national vision. He also recommended looking at the visions in the local plans you are consulting with. Area plans typically have vision statements, and they usually include National Forest System lands.

He said that the planning process should define subjects, public participation procedures, and compliance with statutory requirements. Ideally it should allow flexibility at the local level.

Plans typically contain both the strategic (vision and goals) and the tactical (policies). Plan making involves choices. Don’t approve projects or activities in the plan, because participants will focus on those projects and forget about the vision or goal part of your planning process. Capital improvement projects should follow the plan. The planning process moves from the general to the specific. There needs to be an aspirational nature to planning, but you need to get to the guidance portion (where the meat and bones are). Plans identify ideals defined by the present, and circumstances change. Empirical data is crucial, but no amount of data collection and modeling will substitute for good planning, represented by the goals and the strategies.

Discussion

The Need for Certainty

In follow-up questions, the panel was asked about the need for certainty in Forest Plans. Nie had said that standards were necessary, while Liggett preferred a focus on desired conditions. Nie said the zoning approach resonates with people – not hyper zones like some of the management area allocations in existing plans, but a sense of what can be done. Harper said to be careful about using the term “zoning,” which in the county context implies regulation. The general public might define Forest Service terms differently. Nie thought that people are used to Forest Planning, and they want certainty.

Harper observed that in the planning world, master plans and zoning are different things. If you want to involve the public, then define zoning. Liggett said that implementing NFMA has been a struggle, because it requires us to have all the pieces of planning mashed together. Even if we can just separate the pieces temporarily into phases as we prepare the overall plan, we’d have greater acceptance and understanding.

Cheng characterized plans as a snapshot of today. The 1982 rule provided certainty through timber suitability, but there are other ways to obtain certainty than drawing lines on a map. Nie said that’s why we need a new planning rule. Looking at Tester’s bill, locals have expressed a sense of frustration. They wanted a clear commitment from the Forest Service about a stable land base, and they can’t get that from a strategic and aspirational document. The alternative course of action is Congress, and that’s not the best either.

Ryan also mentioned that the NEPA associated with plans has been seen as a problem for the Forest Service. She said that NEPA has not been the problem; rather, the Forest Service has been losing on procedural grounds for not following its plans.

Liggett was asked to explain the distinctions he was making between desired conditions, guidelines, and standards. He said that desired conditions are word pictures – descriptions of what we want the forest to look like in an ideal state – not necessarily a future state; they could be a current state. There is some disagreement about how detailed they should be, but they are really there to describe the vision. NFMA refers to standards and guidelines as part of management prescriptions. Over the years some space opened up between the terms standards and guidelines: guidelines evolved to be a more flexible, somewhat softer variant of a standard. During project planning they are reviewed on the ground. Standards and guidelines are all statements that apply to projects; they have no function if you aren’t doing any projects.

Liggett was asked why implementation of the Southern Appalachian plans has been spotty. At the project level, guidelines that produce outputs seem to be followed, while guidelines for things like old growth survey or designation might not be. Liggett said that plans can be subject to misapplication. He also added that in a lot of plans under the 1982 rule, you find a lot of spillage between the different sections of the plan: objectives look like desired conditions, and guidelines read like monitoring requirements. The last rule attempted to define those components so they would actually work together. Paring down the number of standards is the first step. There is still a lot of variation in how plans are being used. Some line officers understand planning.

Harper said that when deciding whether you have a good plan or a bad plan, a good plan has citizens who actually show up and support it. When people try to change it, you have people defending it, or at the very least asking for the justification for the change. The mediocre plan gets initial attention and is then put on the shelf – the people who abuse it the most are the people who run it. The poor plan sits on the shelf and gathers dust.

An audience member observed that standards and guidelines come from the expertise of the interdisciplinary team, which has worked out best practices. That represents the best knowledge of the agency. If they don’t belong in forest plans, then where? Liggett said that we need standards and guidelines, as long as they are clearly identified as such. They represent guidance for project and activity decisionmaking. The Fish and Wildlife Service uses the term “standards” to mean something different from what the Forest Service means, so you can get wrapped up in the nomenclature. Planning practice has evolved, and standards may not have been effective. We have a paradigm in which standards are the only measure of accountability, but there are other ways to achieve accountability. As for standards representing working knowledge, Liggett said that many of them really seem to be describing a desired condition or an objective. If you write them that way instead of as standards, they will be stronger.

Cheng said that a collaborative review of standards can help everyone understand the operational constraints that the Forest Service is working under. He described the Wyoming cooperating agency structure to deal with local governments. The Bighorn planning team worked with the cooperating agency mechanisms for a 3-4 week process. The process was a working battleground, but in the end the participants were able to understand the constraints. That event turned the local cooperators into champions of the plans.

How much should the public be engaged?

The panel was asked how to keep the public engaged without burning them out. Ryan suggested looking at planning as building partners. When the planning process ends, all the material disappears from the web, which is a problem. The engagement needs visible updates. Think about workshops for updates on monitoring; report cards are opportunities for further engagement. Harper said that annual reports are very helpful. It’s good to re-engage the public, and independent organizations can check whether the Agency is telling the truth.

Cheng said that the planning rule needs to identify what it expects planning teams to do as they participate in and co-convene collaborative processes. The planning rule has antecedents in the Administrative Procedure Act, providing a clear basis of choice. If there is not a clear basis of choice, the burden is on the agency to get the evidence it needs for one. Collaborative processes require a certain level of capacity and competency, which is uneven across the country. The rule could say that the Agency should use the resources it has available.

Ryan agreed that the rule should define the characteristics of the process. The agency is already held accountable for collaboration through litigation.

Cheng said that Forest planning is not the place to start collaboration. It’s like taking a beginning skier to a double black diamond run; you can’t start there with a forest plan that has so many moving parts. A tremendous amount of capacity building happens in project-level planning: you build networks at the project level and then draw on them. He described the complexities of the GMUG Forest planning process. The pre-NEPA process began in December 2000, and the last collaborative process took place in fall 2004, even before scoping. There was significant adaptation, and a lot of bumps along the road. Unfortunately, the GMUG plan has never seen the light of day, to the great disappointment of participants and stakeholders.

Liggett said that sometimes collaboration is not the right thing to do, citing the work of Ron Heifetz at the Kennedy School of Government. Planning ranges from simple problems with known solutions to situations where you don’t know the problems or the solutions (the wicked problems). Collaboration is one way to approach wicked problems, but it can be inadequate to the task if you don’t frame the problem correctly, or if you try to address things left unresolved because of inaction or malfeasance, which should be sorted out by Congress or some other institution. Instead, you have people thrashing things out on the ground. We need to ask whether we have failed to frame the problem, bitten off more than we can chew, or lack the capacity. Forest planning falls all over this spectrum.

Levels of Planning

Responding to a question about what should be in the rule, and what should be in a plan, Ryan said that Forest plans must have some details. The rule may have broader principles, but the details should be in the plan. Ryan also said that we can’t get too specific at the national level about what is valid science. Part of the collaborative process is making a collective judgement about what is good science – that could be incorporated into the rule.

Liggett said that we need a multi-scale framework for planning. In the past, the Forest Service has tried to characterize planning as a two-stage process (forest plans and projects/activities). But the planning framework really is multi-scale. There may be five levels: national, broad scale (at least for assessment work), forest, a slice of a forest such as a watershed or group of watersheds, and then the project. We get very confused about the levels.

Cheng commented that if plans are just aspirational and there is no intent to actually allocate resources, you’re not going to get people to participate. Liggett said that plans also need more nuts-and-bolts content, but NFMA creates plans that look like they were written by committee. Maybe we need to separate the parts into distinct steps. Harper said that in local planning, it’s clear where the steps are. If projects are included in plans, people will lock in on specific projects and the whole planning process will get skewed. Nie said that his participation on this New Century blog (see Andy Stahl’s KISS posts) has changed his view on this. As long as plans contain a clearly coordinated strategy and the parts are integrated, he is open to the idea of making oil and gas leasing, travel management, and other decisions outside of planning. Liggett agreed, but observed that these separate activity decisions will splinter the planning approach. We need to resist this, but we shouldn’t put everything on the back of forest plans. We need to find a balance.

One audience participant commented that back in the 1970s he served on the Society of American Foresters’ committee formed to address the adverse Monongahela decision on clearcutting. The committee’s recommendations were incorporated into NFMA. The road was paved with good intentions. He joked that he had thought the theory would work, but he encouraged everyone not to give up hope.

National Audience Concern about the Control of Local Participants

Questions were asked about how to involve interested parties who do not live in the local area. Harper reiterated that mapping the location of interested citizens is important, so you don’t miss the non-locals. Liggett said there are inherent dangers, but line officers are part of a national organization, so they are well schooled in this. Cheng said that there are still standards for avoiding arbitrary and capricious decisions, and there is accountability for not relying too heavily on any one source of information. Cheng takes a more empirical perspective on this philosophical concern: how often do collaborative processes actually lead to agency capture? Was the Tester bill collaborative? Some people will disagree because of the multiple points of entry. There are legitimate claims against anything we come up with, but democracy is better than any alternative.

Nie said that this is a tension that needs to be balanced. There is a larger statutory framework of safeguards and sideboards (NEPA, NFMA). For the rule development, perhaps the most constructive approach is to marry the safeguards from the top down with the good ideas from the bottom up. Bottom up brings pragmatism, innovation, and community; top down brings standards.

Ryan said that there are many examples of politics just as ugly at the national level as at the local level. We see good examples of innovation at the state and local level, such as climate, energy, and green building, which happen sooner at the local level, and we don’t want to miss those.

Collaboration for the Planning Rule

The panel was asked about the 11/11/11 target for completing the planning rulemaking process, given the desire to collaborate.

Liggett mentioned that the Land Between the Lakes (LBL) plan was done in 17 months and 27 days, with a dedicated planning team. He suggested that the rule can be done quickly, and said that difficult problems don’t take a long time to solve. It’s important to frame the questions correctly; if we do that for this rulemaking, we can meet the timeframe. Harper said the rule can’t be everything to everyone. There should be some preparatory work and a timeframe to create a sense of urgency. Make a decision: planning is a decision-making process.

Cheng asked how many prescriptive, substantive goals for all forest plans the rule should contain. There are still fuzzy issues out there. How prescriptive does the rule need to be as a framework for guiding choices? The more certainty you think people want, the more prescriptive the rule must be. On the other hand, the more prescriptive you are at the national level, the greater the chance of errors.