Structural Problem: Using the Power of Hyperlinks in Scientific Publishing... or Not.

For those of us who grew up with paper journals, article length was constrained by the limits of print. Journals are now published online, but complex datasets may well need more “room” to show how things were calculated and which numbers were taken from which data sets. This seems especially true for studies that draw on a variety of other data sets. Without that clarity, how can reviewers provide adequate peer review? As Todd Morgan says:

In some ways, I think journal articles can be a really poor way to communicate some of this information/science. One key reason is space & word count limits. These limits really restrict authors’ abilities to clearly describe their data sources & methods in detail – especially when working with multiple data sets from different sources and/or multiple methods. And so much of this science related to carbon uses gobs of data from various sources, originally designed to measure different things and then mashes those data up with a bunch of mathematical relationships from other sources.

For example, the Smith et al. 2006 source cited in the CBM article you brought to my attention is a 200-page document with all sorts of tables from other sources and different tables to be used for different data sets when calculating carbon for HWP. And it sources information from several other large documents with data compiled from yet other sources. From the methods presented in the CBM article, I’m not exactly sure which tables & methods the authors used from Smith et al. and I don’t know exactly what TPO data they used, how they included fuelwood, or why they added mill residue…

As Morgan and I were discussing this via email, Chris Woodall, a Forest Service scientist and one of the authors of the study, added:

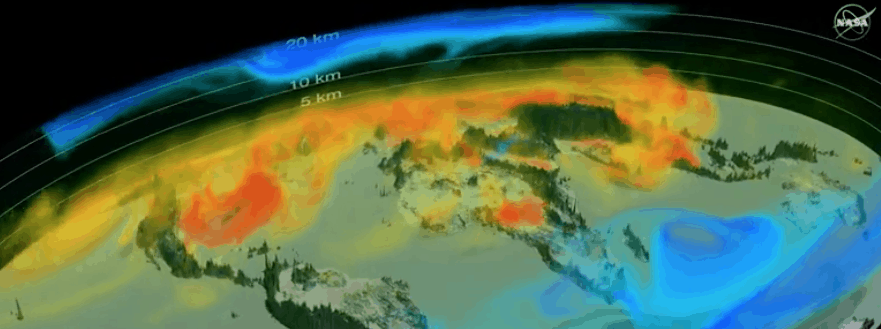

“This paper was an attempt to bring together very spatially explicit remotely sensed information (a central part of the NASA carbon monitoring systems grant) with information regarding the status and fate of forest carbon, whether ecosystem or HWP. We encountered serious hurdles trying to attribute gross carbon changes to disturbance agents, whether fire, wind, or logging. So much so that deriving net change from gross really eluded us, resulting in our paper only getting into CBM as opposed to much higher tier journals. The issue that took the most work was trying to join TPO data (often at the combo county level) with gridded data, which led us to developing a host of lookup tables to carry our C calculations through.”

Woodall brings up two structural problems:

Structural Problem: NASA Funding and the Scientific Equivalent of the Streetlight Effect.

https://en.wikipedia.org/wiki/Streetlight_effect

NASA has money for carbon monitoring based on remote sensing. Therefore, folks will use remote sensing for carbon monitoring and try to link it to other things that aren’t necessarily measured well by remote sensing. Would the approach have been the same if Agency X had funded proposals to “do the best carbon monitoring possible” and given lots of money to collect new data specifically to answer carbon questions?

Structural Problem: Not all journals are created equal.

But the public and policymakers don’t have a phone app where you type in the journal and it comes out with a ranking. And what would you do with that information anyway? Also, some folks have had trouble publishing in some of the highest-ranked journals (e.g., Nature and Science) when their conclusions don’t fit certain worldviews, not necessarily because they used incorrect methods or because their results are shaky. So knowing the journal can help you determine how strong the evidence is... or not. But Chris clearly points out that in this case, the research only made it into a lower-tier journal. Does that mean anything to policymakers? Should it?