Previously we’ve talked about how scientific research is funded, and how researchers may not think they will be rewarded for studying something that helps practitioners, because such work is neither funded nor cool. In some fields, there is not even a direct linkage between practitioners and researchers.

Penalized for Utility

At one time I was asked to serve as the representative of research users on a panel for the research grade evaluation of a Forest Service scientist. He had been doing useful work, funded by people interested in what he was doing, and seemed to be doing a good job. Now remember that Forest Service research is supposed to study useful, mission-oriented things. One of the researchers on the panel said that the scientist under review didn’t deserve an upgrade because he was doing “spray and count” research (he was an entomologist). The implicit assumption, even in our applied work, is that excellence is not determined by the user. I asked, “But you guys funded him to do this; how can you turn around and say he should be working on something else?” In my own line of work, what was cool was to apply some new theoretical approach from a cooler field and see if it worked. I’d also like to point out that I’ve heard the same kind of story from university engineering departments, e.g., “The State (it was a state university) folks asked Jane to investigate how they could solve a problem, but Jane was denied tenure because the kind of research she did wasn’t theoretical enough.”

Beware of Science Tool Fads

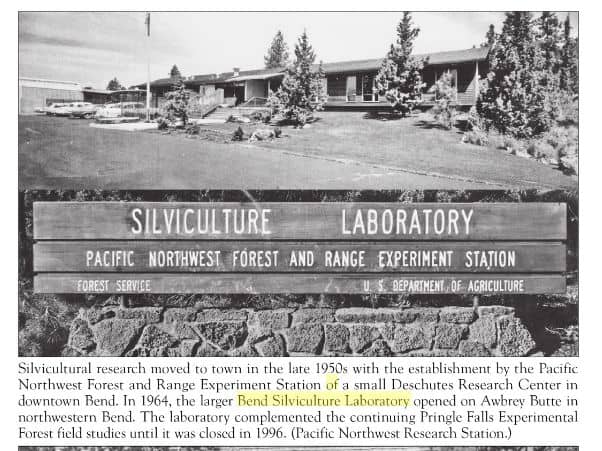

Ecologists did this with systems theory, which came from a “higher” field (math). Sometime in the 1990s, I was on a team reviewing one of the Forest Service Stations. Some fish geneticists at the Station had done excellent, groundbreaking work learning where trout go in streams, which is very important to know for a variety of reasons. The Assistant Director told me that that kind of research was passé, and that in the future, scientists would only study “systems.” But, I asked, how can you understand a system without understanding anything in it? To be fair, genetically engineered (GE) trees for forest applications were also a fad.

Big Science and Big Money

In 1988, I was at the Society of American Foresters Convention in Rochester, New York, and had dinner with a Station Director. I was a geneticist with the National Forests at the time. He said that his Station wouldn’t be doing much work related to the National Forests anymore, because now their scientists could compete for climate change bucks and do “real science.” (Thank goodness this wasn’t a western or southern Station Director.)

I’m not trying to be hard on the Forest Service here; it just happens to be where I worked (and FS scientists themselves are generally quite helpful).

Right now I’m thinking of different vectors of coolness. There is the abstraction vector, which may go back to the class-based distinctions from the 19th century that we talked about last time: the more abstract, the better. But what you study has a coolness vector as well. Elementary particles are better than rocks, rocks are better than organisms, and people (the social sciences) are at the bottom. Tools have a coolness vector of their own. Superconducting super colliders: great. Satellite data, computer models, lidar and so on: good. Counting or measuring plants: not so cool. Interviewing people: well, barely science.

The feedback from users is another vector. Highest is “What users? We are adding to human knowledge!” Somewhere in the middle is “National governments should be listening to us, but we’re not asking them what’s important.” And finally, at the bottom, is “Yes, we determine our research agenda by talking to the people with problems.”

There also seems to be a feeling that non-interventional research (“studying distributions of plants”) is better than intervening in the lives of plants. Perhaps that is the old class-based vector of coolness, transformed into “environmental” vs. “exploitative.”

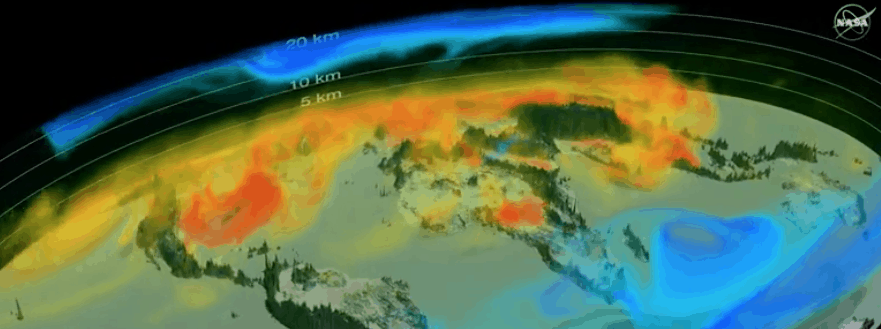

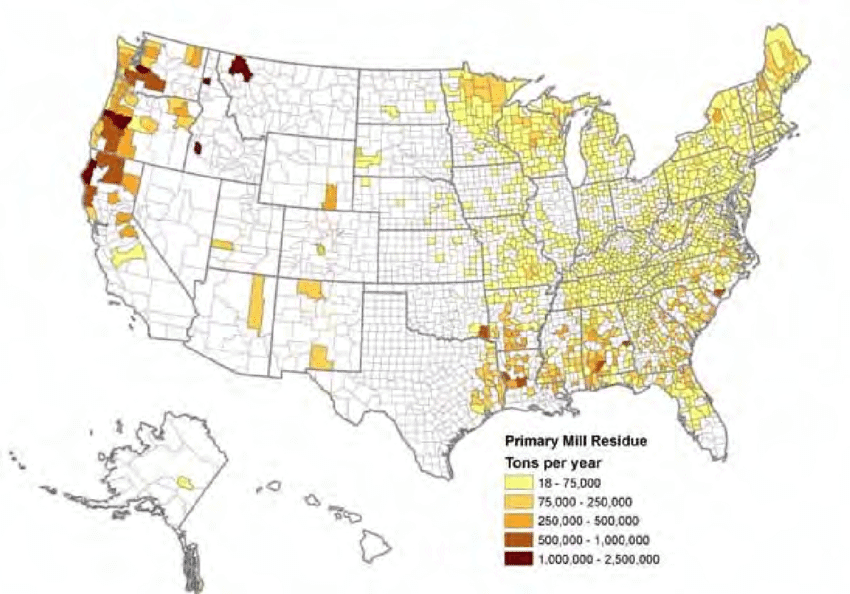

Given that both of these improve the environment, which do you think is cooler, and why?